AGI is not guaranteed

I have seen a lot of bold claims lately about AI and its potential implications for the workforce. For example, the Anthropic CEO said that “in 3 to 6 months, AI will be writing 90% of code.” [1] Recently at work, a coworker said, “Coding will become like art, where humans will only do it for pleasure,” implying that AI will be far more productive than humans. These conversations have led me to reflect on the state of AI and its future.

Where we are today

To think about the future, we need to understand where we are currently. It’s been nearly 3 years since the initial release of ChatGPT at the time of writing. Since then, the underlying models have improved a lot: larger context windows, better weights through better training, and new protocols that allow them to interact with external systems. The latest frontier is agentic models with a heavy focus on coding.

The current focus of many large tech firms is operationalizing AI agents to accelerate development and reduce the engineering headcount needed to deliver new features. Current AI agents are capable of producing usable code in small and medium-sized codebases. However, even in medium-sized codebases these agents run into context window limitations, so they need to be used in short bursts or rely on workarounds like “summarizing” context to make it smaller. Agents can be useful tools, but they appear to be just that: tools. Even state-of-the-art models still need to be operated by a human who has some idea of what they are doing. At least in the short term, the idea of a completely autonomous agent is nowhere near practical.

Looking to the future

While the current focus of many companies is agents, many AI companies have their eyes on artificial general intelligence, or AGI. There are many definitions of AGI, but I am going to define it as a machine or software that can match peak human performance at any task. In other words, anything you can do, it can do at least as well. Many leaders in the AI space and in government institutions believe that we are on a fast track to achieving AGI.

This is concerning to me, as nothing guarantees that AGI will happen or that it is even possible. There are important questions we need to answer first:

- Is AGI even possible?

- Is the current approach to AI a local minimum?

- How will we know if AGI is achieved?

The concept of AGI can certainly exist in a perfect world. However, we do not live in a perfect world. Many equations in physics have theoretical solutions that will realistically never occur because of real-world constraints. I believe the bar for AGI is not completely out of reach, as it only needs to match or surpass humans, who are also imperfect. But we still need to take into account practical constraints like energy production and training data.

Current AI models are notoriously resource intensive. Given that we do not even have a known end point for achieving AGI, we also do not know how much energy it would require. For all we know, the energy required to operate AGI could exceed the amount of available energy in our solar system. If this is the case, AGI would not be practically possible in my lifetime. I tend to believe it should be possible with much less energy, but the fact of the matter is that we do not know.

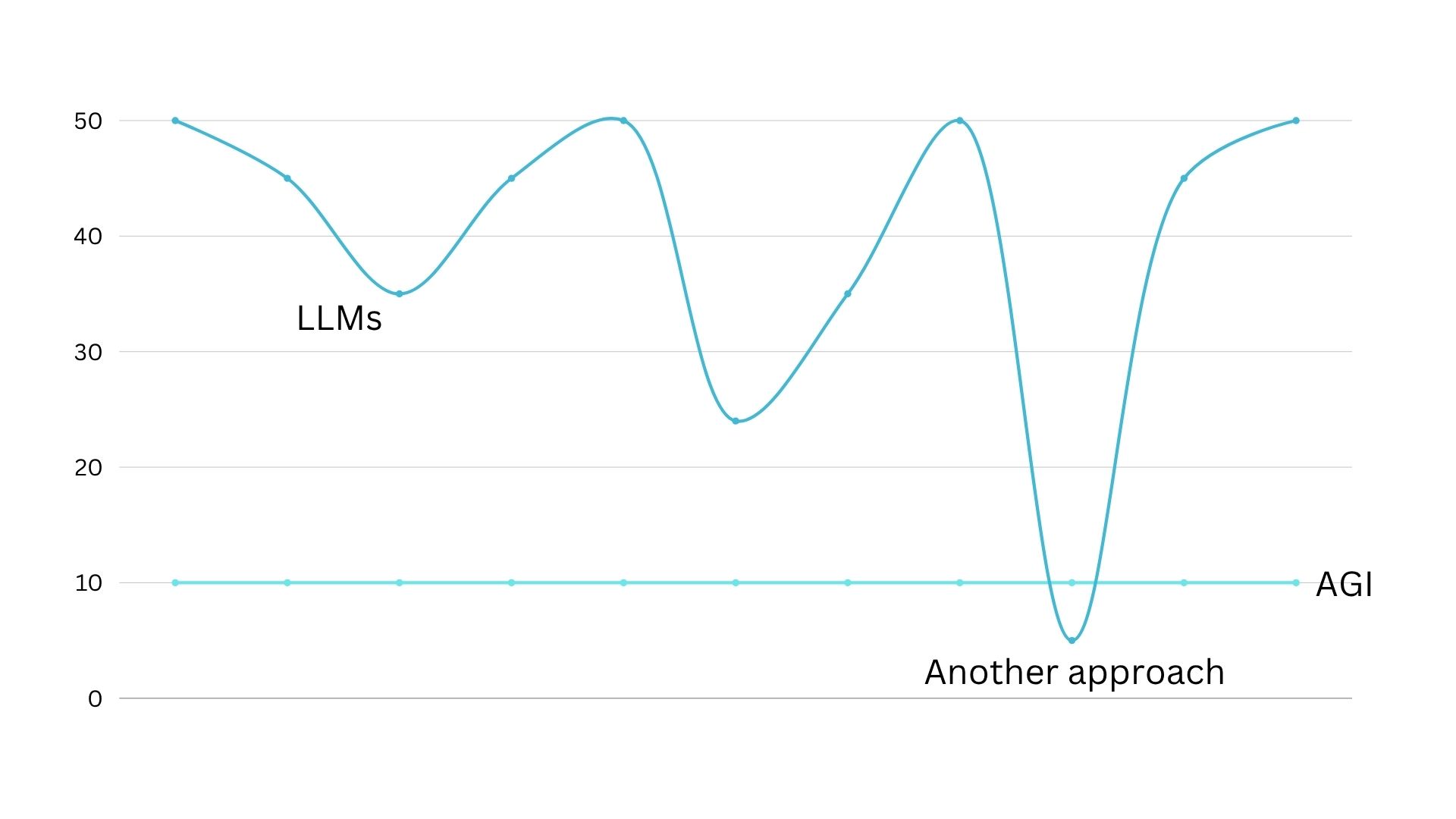

This leads me to ask: is the current approach to AI even optimal in the first place? LLMs and RAG have given us a great leap forward in AI progress, but it is possible that we are approaching a local minimum. In the short term, we see great improvements in AI capabilities, but in the long term we hit the limits of the algorithms and hardware. I tend to believe this is the case. Our models are very impressive compared with previous AI capabilities, but we are starting to see diminishing returns on training and on model and context size. If we want to see more large leaps, it will require going backwards (likely for years) to develop a new approach to modeling reality.
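The local minimum idea can be made concrete with a toy sketch in Python. Everything here is an illustrative assumption rather than anything taken from real model training: the function `f`, its gradient `grad_f`, and the helper `descend` are hypothetical names, and the curve was simply chosen because it has one shallow valley and one deeper valley.

```python
# Toy illustration of a local minimum. The curve f and all names below are
# hypothetical and chosen only for this example; nothing here models real AI training.

def f(x: float) -> float:
    """A curve with a shallow valley near x = 1.13 and a deeper one near x = -1.30."""
    return x**4 - 3 * x**2 + x


def grad_f(x: float) -> float:
    """Derivative of f."""
    return 4 * x**3 - 6 * x + 1


def descend(x: float, learning_rate: float = 0.01, steps: int = 1000) -> float:
    """Plain gradient descent: always step downhill from the current point."""
    for _ in range(steps):
        x -= learning_rate * grad_f(x)
    return x


# Same method, different starting points, different valleys.
shallow = descend(2.0)   # settles in the shallow valley (the local minimum)
deep = descend(-2.0)     # settles in the deeper valley

print(f"start  2.0 -> x = {shallow:.2f}, f(x) = {f(shallow):.2f}")
print(f"start -2.0 -> x = {deep:.2f}, f(x) = {f(deep):.2f}")
```

Both runs only ever take steps that improve the result, yet the run that starts at 2.0 ends up stuck in the shallower valley. The only way from there to the deeper valley is to climb uphill first, which is the toy version of "going backwards" to find a better approach. This is only an analogy for the argument, not evidence for it.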

Finally, we have no way of knowing whether we have achieved AGI. The best we have now are AI benchmarks and anecdotal evidence. AI benchmarks are a useful tool, but it is unclear whether scores translate to real-world performance. We also see AI companies prioritizing these benchmarks while neglecting areas that the benchmarks do not test.

Given the state of AI capability measurement, I suspect we will see an AI company (or government) prematurely declare that AGI has been achieved. There are many incentives to do so, so I would not be shocked to see this happen in the next 5 years.

Is all lost?

I tend to view the current AI hype cycle as a bubble no different than the dotcom bubble of the 1990s. All of the large AI companies are bleeding money, and investors will only wait so long to see a return. The hype behind AI has not been about what it can do now, but about what it will do in the future. Eventually, these companies will need to show some path to sustainability, and we have not seen any sign of that.

However, this does not mean that all AI tools are useless. In the dotcom bubble of the 1990s, many companies failed, but the genuinely useful products like Google survived and thrived. For us to get there, though, we will need to live through the AI bubble.

We see a lot of companies feeling pressured to adopt AI. While there are legitimate use cases for AI, many companies are jumping on AI to please investors or simply out of FOMO. This is unfortunate for consumers of software but unlikely to change in the near future.

Once the VC money eventually dries up and the hype has died down, I am optimistic that the trend of putting AI into everything will stop and we will see a more conservative, thoughtful use of AI in applications. AI unlocks use cases that were previously not possible, but it is not a silver bullet that solves all problems, and we are not guaranteed to see AGI anytime in the near future.